We have all heard about, argued about, and complained about the removal of Drive Extender (DE) from Vail. Since we may have to find other ways to store massive amounts of data, I wanted to experiment with RAID, as that seems like the most likely replacement for DE. Here are some initial impressions, results, and opinions from my first phase of RAID testing. To simulate Vail, I used Server 2008 R2 with the software RAID support built into the OS as my baseline.

Hardware

I used four WD 2 TB Green drives (Advanced Format) with no jumpers or special configuration, paired with the Gigabyte GA-H55M-USB3 motherboard. I connected all the drives for the array to the onboard Intel controller and the boot drive (750 GB Caviar Black) to the JMicron controller.

Setting up the RAID

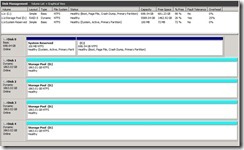

To configure the array, I used the built-in wizard in Server 2008 and created a RAID 5 array from the four WD drives. Combining the volumes was pretty quick; however, a complete sync of the array for parity took 4 days! That works out to approximately 1.1% complete per hour. Keep in mind that the storage is usable during this time, but your data is not protected until the sync finishes, so use it at your own risk. Once complete, this is what I saw in the drive manager.
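As a quick sanity check on the sync time, here is a small Python sketch that turns the observed rate into a total duration. It assumes the rate stays constant for the whole sync, which is only an approximation, and the function name is my own.

```python
def resync_estimate(percent_per_hour):
    """Return (hours, days) needed to reach 100% at a constant rate.

    Assumption: the sync progresses at a steady rate from start to finish.
    """
    if percent_per_hour <= 0:
        raise ValueError("rate must be positive")
    hours = 100.0 / percent_per_hour
    return hours, hours / 24.0


# Rate observed during this test: about 1.1% per hour.
hours, days = resync_estimate(1.1)
print(f"{hours:.1f} hours, about {days:.1f} days")
```

At 1.1% per hour this comes to roughly 91 hours, which matches the 4 days I saw.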

Speed impressions and results

The first thing you notice once the array is complete is that the server is very responsive and pretty fast at both reading and writing data. As you can see from the picture below (the pictures show writing to the array, not reading from it), transferring large files is very fast, ranging from 90-100 MB/s continuous with minimal fluctuation. For a short time I hit a sustained speed of 105 MB/s, which is very close to the limit of a gigabit link. Keep in mind also that these tests were run transferring data from WHS V1 to this test server. When I ran some follow-up tests from 2008 R2 to a PC with an SSD, I hit about 110 MB/s.
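To put those numbers next to the gigabit ceiling, here is a rough Python sketch. It compares the observed speeds against the raw line rate of a 1 Gbit/s link and assumes decimal megabytes (1 MB = 1,000,000 bytes). It does not model Ethernet, TCP/IP, or SMB overhead, so the real usable ceiling is somewhat lower than the 125 MB/s shown here. The function names are my own.

```python
GIGABIT_BITS_PER_SECOND = 1_000_000_000  # nominal rate of a gigabit link


def line_rate_mb_per_s(bits_per_second=GIGABIT_BITS_PER_SECOND):
    """Raw link rate in decimal MB/s, ignoring all protocol overhead."""
    return bits_per_second / 8 / 1_000_000


def utilisation(observed_mb_per_s, bits_per_second=GIGABIT_BITS_PER_SECOND):
    """Fraction of the raw line rate that an observed transfer speed uses."""
    return observed_mb_per_s / line_rate_mb_per_s(bits_per_second)


print(f"Raw gigabit line rate: {line_rate_mb_per_s():.0f} MB/s")
for speed in (90, 105, 110):  # speeds observed in the tests above
    print(f"{speed} MB/s is {utilisation(speed):.0%} of the raw line rate")
```

If the copy dialog is actually reporting binary megabytes, the percentages would come out about 5% higher.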

Overall Impression

The biggest issue with the method I used is expandability. For starters, using onboard controllers with software RAID does not let you add drives without rebuilding the array, which effectively kills your data. In addition, you "should" have matched drives (meaning type, size, and firmware) to prevent issues. Using a dedicated controller card does allow you to expand the RAID; however, dedicated cards are very expensive ($300+ for a simple 8-port controller). This means that you have to plan your server for the future at the very beginning, which makes your price of entry very expensive. My test server has four 2 TB Green drives (EARS) in a RAID 5 array, which would add roughly $400 to your initial build if you wanted a server with about 6 TB of storage. The only upside is that the RAID 5 configuration is very fast compared to Drive Extender, so if you can live with very few options for expandability, you will love the performance. All in all, I would not trade DE for a faster RAID configuration, as the lack of flexibility is a real deterrent for me. In part 2, I will cover an experiment with a dedicated RAID controller.
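For reference, the 6 TB figure above comes from the usual RAID 5 rule that one drive's worth of space goes to parity. Here is a minimal Python sketch of that arithmetic, using the roughly $400 drive cost from this build. The function name is my own, and the binary conversion is included only because Windows reports sizes in binary units, so the volume shows up smaller than 6 TB.

```python
def raid5_usable_tb(drive_count, drive_tb):
    """Usable capacity of a RAID 5 array of equal-sized drives, in decimal TB.

    One drive's worth of capacity is consumed by parity.
    """
    if drive_count < 3:
        raise ValueError("RAID 5 needs at least three drives")
    return (drive_count - 1) * drive_tb


usable_tb = raid5_usable_tb(4, 2.0)         # four 2 TB drives
usable_tib = usable_tb * 1e12 / 2**40       # same capacity in binary units
cost_per_usable_tb = 400 / usable_tb        # rough drive cost from this build

print(f"Usable: {usable_tb:.0f} TB (about {usable_tib:.2f} TiB)")
print(f"Drive cost per usable TB: about ${cost_per_usable_tb:.0f}")
```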