I have been testing and using Storage Spaces on Server 2012 Essentials since its release. I am using it to back up two systems and to store some movies, music, and photos. It has mostly been testing and very light use. On Tuesday I got a surprise I did not expect.

Before I get into the details of the error, let me first explain the setup. I have Storage Spaces set to “Parity” mode using three Western Digital Green drives. All three drives are connected to a Highpoint 2680 (non-RAID) and are pooled together in one 3.7 TB parity volume using only Storage Spaces. I did not load any of the Highpoint software, and the drives are configured in legacy mode. This is installed in a dedicated box using a Gigabyte H55 board with an i3-530. The drives have been configured this way since I put the box together and started using Server 2012 Essentials.

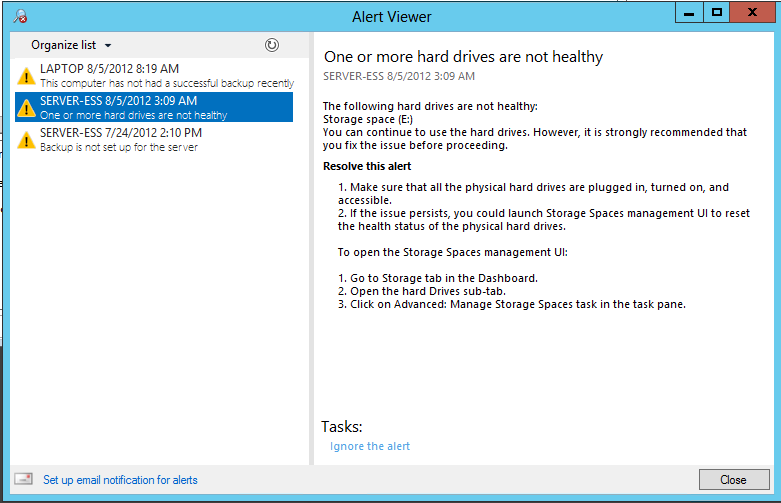

On Tuesday, I woke up to the wailing sound of an alarm. When I ran to my office, I assumed it would be a UPS, but it turned out to be the built-in alarm on the Highpoint card. The odd thing is that the drivers for that card were not loaded, nor was the card configured for RAID. I was very surprised the alarm even went off, but mostly I was curious as to why. I opened the dashboard and saw that I had an error in my Storage Spaces. It told me that one or more drives were not healthy, but it did not tell me which one or why. Looking a bit further, I saw that it was the Storage Spaces drive, but the details it provided were not very informative. The warning only told me to go to Storage Spaces and manage my storage.

At one point it offered the option to repair it, and it ran a check disk on all three drives and found no errors. Shortly after that it began a repair process which took many hours to complete. When it finished, it still did not tell me anything other than that it was completed (not much help). Since check disk showed OK and the volume was rebuilding, I was unhappy with not knowing what had happened, so during the rebuild I decided to load the WebUI from Highpoint to view the health of each drive. I looked at the properties of each drive and all appeared to be good; it did not show any errors or health conditions.

The troubling thing about this is that it happened for no apparent reason. The system did not lose power and is hooked up to a UPS. None of the drives showed any SMART or health issues. The controller did not flag any errors of any type, and the event viewer did not show any problems either, yet the volume found it necessary to do a 6 hour rebuild. There are several things that concern me about this little issue. First, there does not appear to be any way to know which drive is causing the problem. Had this been a production system, I would not have been able to identify which drive in the system was at fault. Even if it flagged a drive as bad, there does not seem to be any way to track that back to a specific controller port or drive location. Second, there is the unexplained reason for the rebuild in the first place. What caused it and why? They should tell me something. Lastly, since this has only been running for about 10 days, the robustness of Storage Spaces is now a big concern to me. I have never seen this happen in two years of running RAID 24/7. This might be a fluke, but it makes me doubt how stable and robust this really is. Granted, I did not lose data, but this is still a bit too flaky for me to trust right now. I would definitely wait for the first service pack if you are going to trust your data entirely to Storage Spaces.

[…] Mike’s recent SS failure doesn’t reassure BYOB that it’s ready for your data. […]

[…] make sense on a home version of this product given the failures I have had using Storage Spaces (Essentials version and this one) and what appears to be questionable portability between systems. It is possible […]
